The Mechanical Turk – or the invisible, low-cost labour of automation

In 1769, in the midst of the Enlightenment, the Hungarian inventor Wolfgang von Kempelen approached the court of Empress Maria Theresa of Austria with an invention that would amaze spectators across Europe for years to come. Inspired by the era's fascination with "automatons" (Schaffer, 1999) – automated machines that perform human tasks – von Kempelen had built a chess-playing machine dubbed "der Schachtürke" (literally "the chess Turk"), known in English as "the Mechanical Turk". The Mechanical Turk consisted of a large cabinet with a chessboard and chess pieces. Seated behind the cabinet was a mechanical figure of a Turkish chess player, smoking a pipe and watching the chessboard: the automated chess player. To prove that the machine worked, von Kempelen, acting as its operator, would open the cabinet from all sides, revealing an elaborate arrangement of cogs, gears and levers. Moreover, the figure of the Turkish chess player could be opened up, exposing its inner workings and, most importantly, apparently proving that everything was entirely mechanical and automated. After showing the inside of the automaton to the audience, von Kempelen would wind up the machine, whereupon the Turk would begin a game of chess against any opponent, often chosen from the audience.

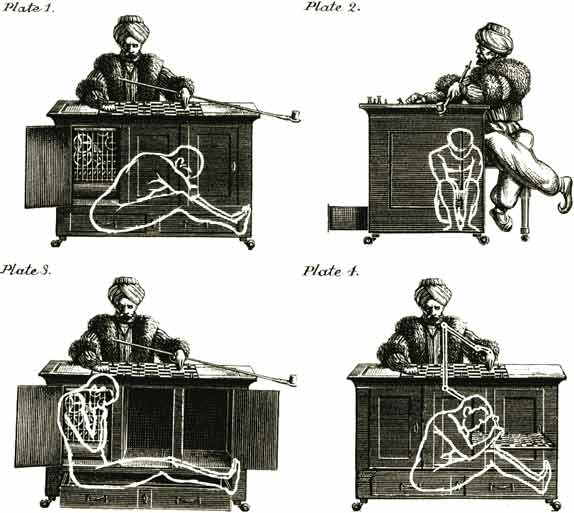

Moving the pieces, tipping the board, nodding and even bowing – all these movements were seemingly performed automatically by the Mechanical Turk, much to the amazement of the audience, and it won almost every game it played. Even more complex problems, like the knight's tour – a sequence of moves in which the knight visits every square of the board exactly once – posed no difficulty for the automaton. Touring the courts and fairs of Europe, the Mechanical Turk quickly rose to prominence, playing against, among others, the future Emperor Paul I of Russia, Benjamin Franklin, then US ambassador to France, and Napoleon I of France. The exact workings of the Mechanical Turk, however, remained the subject of academic and political debate for decades. Even though von Kempelen always described its functioning as a mechanical trick, speculation ranged from hypnosis and magnetism to a dwarf hidden inside or some other kind of intelligent agent within the automaton. Only around the 1850s, after numerous hypotheses and studies had been published, were the inner workings of the Mechanical Turk revealed: the cabinet in fact offered far more room than its appearance suggested, with secret compartments and doors that left enough space for a full-grown adult to hide inside, usually a highly skilled chess player (see Fig. 1 for a schematic drawing of the interior of the Mechanical Turk). The Mechanical Turk was indeed just a trick, an illusion of automation and intelligence that bewildered its audiences by black-boxing its actual operations.

Figure 1: Drawing of von Kempelen's Mechanical Turk (Villatoro 2013)

It’s a kind of magic

Fast forward to 1997, and IBM's supercomputer Deep Blue enters the scene. Built as a chess-playing supercomputer, Deep Blue, after several failed attempts, finally managed to beat reigning world chess champion Garry Kasparov in a six-game match. While this achievement is considered one of the great milestones in the history of AI, there is a striking analogy to the Mechanical Turk that is often overlooked: both were machines defeating humans, yet both relied heavily on human input to function. The Mechanical Turk could only work with a human inside the machine operating the mechanical figure. Deep Blue drew on the countless games chess grandmasters had played before, which provided the machine with a database of openings, moves and combinations. While these machines have amazed their audiences, the operations that made them possible remain in the dark, preserving the aura of some kind of magic or superhuman technology. The same holds for AlphaGo, the Go-playing AI developed by DeepMind, a subsidiary of Alphabet (Google's parent company), which also relied on records of previous games played by humans – although its successors (AlphaGo Zero, AlphaZero and MuZero) learned their games without any human game records, entirely through self-play reinforcement learning.

The hidden workers of AI

What this analogy shows is that even though AI technologies convey the idea of being fully automated, they simultaneously hide the amount of labour needed to make the system operational. Both the hardware and the software components of AI systems rely on exploitative and hidden forms of labour: from the extraction of rare earth elements in mines in countries like China, Myanmar or Brazil, to the hidden work of preparing and labelling data for the training and maintenance of AI systems, done through crowd work and micro-tasking. Workers spread around the globe are tasked with highly repetitive micro-tasks such as collecting data, labelling images, or testing and validating algorithms. Ironically, this data work is often mediated by crowdsourcing platforms such as Amazon's Mechanical Turk, a marketplace where companies or individuals called "requesters" publish the work they want to outsource and set the pay (generally just a couple of cents per task), while "distributed workers" choose which tasks to work on, quite often earning less than their local minimum wage (Crawford 2021).

Potemkin AI

When presenting Amazon's Mechanical Turk in 2006, Jeff Bezos described the service as "[a]rtificial artificial intelligence" that "[r]everses the roles of computers and humans." As with the original Mechanical Turk, deception lies at its core, black-boxing the work that is performed to make the automated operations of AI possible. The amazement and magic of AI today is only made possible by these unregulated conditions of exploitative, hidden and distributed labour, just as von Kempelen's Mechanical Turk was only made possible by the chess player hidden inside the cabinet. Nowadays, some services even go as far as promoting their technology as automated and AI-enabled while being operated entirely by humans performing micro-work at almost no cost, enabled by platforms such as Amazon's Mechanical Turk. Where it is cheaper, companies disguise their service as a "Potemkin AI", named after the sham villages supposedly erected to impress Catherine the Great, and have humans pose as chatbots or transcribe voice messages into text, all the while holding up the shiny façade of the magic of automation.

Fearing automation

While discussions of AI, automation and labour have often been tied to a fear of job losses, this fear appears unjustified, at least given the current state of technology. This does not mean, however, that AI-induced automation is no cause for concern. Low-cost, hidden and repetitive manual labour is essential for these technologies to work and will remain so in the near future. This labour is performed by data workers who collect, clean, prepare and label datasets for AI training, and by other workers tasked with correcting and tweaking AI-generated results. The translation industry is one example: translators are increasingly assigned to proofread translations produced by AI services, for a fraction of what their own translations would earn. Particularly when platforms like the Mechanical Turk offer worldwide, 24/7 outsourcing, including access to a global, unregulated and highly competitive labour market, the concern with automation is not the loss of jobs. Instead, it should be the growth of a global working poor, whose labour remains hidden even though it is necessary to automate tasks and operations. All of this serves merely to maintain the façade of a truly magical technology called (artificial) artificial intelligence.

Sources:

Crawford, Kate (2021), "Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence", Yale University Press.

Schaffer, Simon (1999), "Enlightened Automata", in: William Clark, Jan Golinski and Simon Schaffer (eds.), The Sciences in Enlightened Europe, University of Chicago Press, pp. 126-165.